Gemini Tutorial

Google Gemini API for Python

This tutorial is a subset of the lab assignment.

This part gives you your first experience using the Gemini Python API. We walk through the official tutorials and try to abstract away the complicated parts of the official documentation.

Google’s Gemini API uses a Python interface. This is unlike the Genius and Wikipedia APIs, which used special URLs to access data. To access the Gemini API, we install the google-genai package, which also installs related Google packages.

Gemini API Key

We shared an API key with you through email. This individualized API key allows Gemini to recognize who is using their API.

Please DO NOT share your API key outside of this class. We will disable your API key (1) if you misuse it, (2) if you exceed the free usage tier during the project, and (3) for all students after the semester has ended.

If you’d like to play around with your code after the term, you’ll have to get your own API key. Ask us how to do this!

Remember that we avoid storing our API keys publicly. In api_key.py, we set my_client_access_token to be this string of alphanumeric characters. We then load it into our notebook environment as the Python name GOOGLE_API_KEY.
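For reference, here is a minimal sketch of what api_key.py might contain. The environment-variable lookup and the placeholder string are assumptions for illustration, not part of the course setup; in practice you would paste in the key we emailed you.

```python
# api_key.py (hypothetical contents, shown for illustration only)
import os

# Assumption: if an environment variable named GOOGLE_API_KEY is set, use it;
# otherwise fall back to a placeholder that you replace with your own key.
my_client_access_token = os.environ.get("GOOGLE_API_KEY", "PASTE-YOUR-KEY-HERE")
```

Keeping the key in its own file means the notebook itself never contains the key, so the notebook can be shared without exposing it.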

import api_key

GOOGLE_API_KEY = api_key.my_client_access_token

Our first (two) API request(s)

So far, the Genius and Wikipedia APIs we’ve worked with have been what are called RESTful APIs, where we specify a URL to access structured data from an endpoint. After making each request, we processed the JSON response. Now, we will directly use a Python library API, developed by Google as part of their Gemini Software Development Kit (Gemini SDK).

Using a Python API means that we will make a Python client object using Google’s genai package, then call that object’s methods to get data from Gemini. A Python API is often more convenient than a RESTful API when (1) the input and output are both quite flexible in format, as is the case for generative AI data, and (2) our code makes multiple calls to the API across different functions.

The cell below imports Google’s genai Python module:

from google import genai

From chat interface to API

We will first examine the example API request listed in the Gemini Quickstart Documentation (“Make your first request”). You are welcome (and encouraged!) to browse this documentation.

An AI chatbot is an application on top of a large language model (LLM). The LLM is what takes in user prompts and returns text responses. The chatbot is what filters input, perhaps converting and loading files with additional prompts, and returns filtered LLM responses back, perhaps with some HTML or Markdown formatting.
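The layering described above can be sketched in a few lines. This is only an illustration: fake_llm and chatbot are hypothetical names, the stand-in model returns a canned string, and a real chatbot does far more filtering and formatting than this.

```python
def fake_llm(prompt):
    """Stand-in for a real LLM: takes a prompt string, returns a text response."""
    return f"(model response to: {prompt})"

def chatbot(user_input, llm=fake_llm):
    """Hypothetical chatbot layer wrapped around an LLM."""
    cleaned = user_input.strip()          # filter the input
    raw_response = llm(cleaned)           # the LLM does the actual text generation
    return f"**Gemini:** {raw_response}"  # add Markdown formatting on the way back

print(chatbot("  Explain how AI works in a few words.  "))
```

When we use the API, we talk to the LLM layer directly and skip the chatbot layer.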

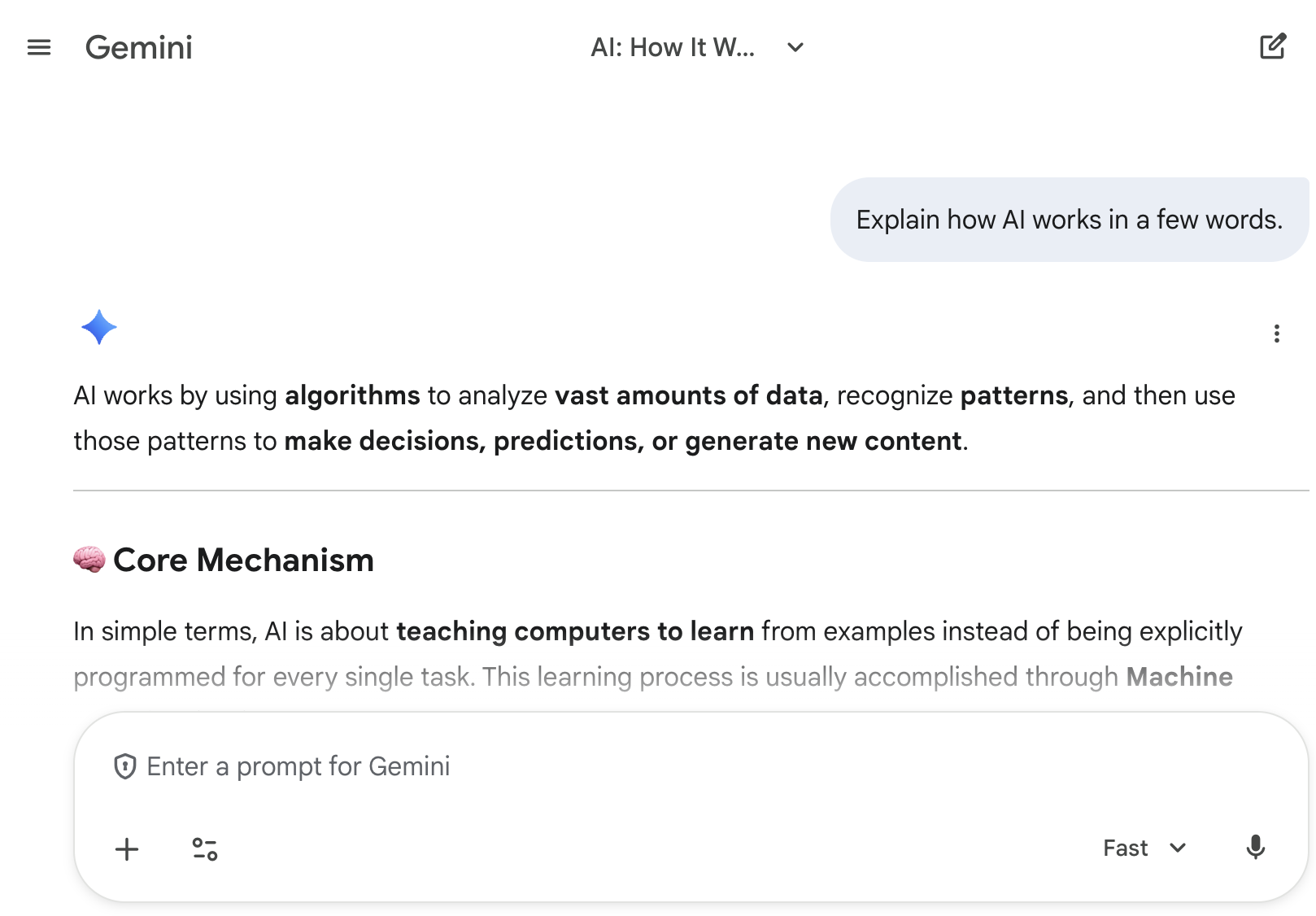

Consider the chat prompt shown in the screenshot, as well as (the start of) the model’s response.

We define three pieces of terminology to describe what is happening in the above screenshot:

- The user prompt: “Explain how AI works in a few words.”

- The model response: “AI works by using algorithms to analyze…”

- The specified model: Here, it is “Fast” (note the dropdown in the bottom right). The other option is “Thinking.” We’ll discuss this more later.

Create the client then make the request

The below code uses Gemini’s Python API to directly query the Gemini LLM with this prompt. We describe the behavior below the cell.

# first, create the client
client = genai.Client(api_key=GOOGLE_API_KEY)

# then make the API request
response = client.models.generate_content(
    model="gemini-2.5-flash",
    contents="Explain how AI works in a few words"
)
print(response.text)

It learns patterns from data to make decisions or predictions.

What just happened (and how you would make these API calls):

- Make an API client: Make a Gemini client object called client, passing in your API key. (We will usually do this for you.) This client lets you make different API calls via its methods. We will focus on one specific method in this class, generate_content.

- Make the API request: Call generate_content, available via client.models. This method takes in several arguments, which we specify by name:

  - model: The underlying large language model. Here we specify Gemini 2.5 Flash, which is equivalent to the “Fast” option in the Gemini chatbot.

  - contents: The prompt: “Explain how AI works in a few words.” We discuss the type of contents later.

- Receive a response: response is a Gemini-specific object type. The details are too complicated for this course. Instead, we focus on the value response.text, the LLM response string itself.
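The request and response steps can be wrapped in one small helper. This is a sketch, not part of the Gemini SDK: ask_gemini is a hypothetical name, and fake_client is a stand-in that only mimics the shape of client.models.generate_content so the example runs without an API key or network access.

```python
from types import SimpleNamespace

def ask_gemini(client, prompt, model="gemini-2.5-flash"):
    """Send one prompt and return only the response text."""
    response = client.models.generate_content(model=model, contents=prompt)
    return response.text

# Stand-in client (hypothetical): same call shape as the real client,
# but it just echoes the arguments back instead of contacting Gemini.
def _fake_generate_content(model, contents):
    return SimpleNamespace(text=f"[{model}] would answer: {contents}")

fake_client = SimpleNamespace(
    models=SimpleNamespace(generate_content=_fake_generate_content)
)

print(ask_gemini(fake_client, "Explain how AI works in a few words"))
```

With the real client created by genai.Client, you would call the same helper with client as the first argument.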

Make another API request

Once we have created the API client, we do not need to remake the client. Instead, we can just make more calls to generate_content.

Remember—LLMs are random response generators, so you will likely get a different response back!

# client is already created, so just call generate_content
response = client.models.generate_content(
    model="gemini-2.5-flash",
    contents="Explain how AI works in a few words"
)
print(response.text)

It learns from data to find patterns and make smart decisions.

Different Gemini Models

You can specify different Gemini models to get different responses. Some more advanced models will produce higher-quality responses, though with the tradeoff that each response will take longer and be more expensive.

We recommend a few models for this course:

- “Fast” Gemini 2.5 Flash model ("gemini-2.5-flash"). Pretty fast, though depending on the time of day responses might still take a few seconds. Reasonable cost.

- “Thinking” Gemini 2.5 Pro model ("gemini-2.5-pro"). More in-depth responses, though responses will take significantly longer than Fast responses. Expensive.

- Extra fast: Gemini 2.5 Flash-Lite ("gemini-2.5-flash-lite"). Very fast, very cost effective.

Gemini 2.5 Pro not only has longer request times; it is also more expensive per request. We strongly recommend testing out prompts with Gemini 2.5 Flash or Gemini 2.5 Flash-Lite. Only once you are satisfied with your prompt should you change models.

We use Gemini 2.5 Flash for the remainder of this tutorial.
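One way to keep the model choice in a single place is a small lookup table. The model name strings are the ones listed above; the nicknames and the pick_model helper are hypothetical and only illustrate the "test cheaply, then switch" advice.

```python
# Model name strings are the ones recommended above; the nicknames are our own labels.
MODELS = {
    "fast": "gemini-2.5-flash",
    "thinking": "gemini-2.5-pro",
    "extra_fast": "gemini-2.5-flash-lite",
}

def pick_model(still_testing_prompt):
    """Use the cheaper model while iterating on a prompt, then switch."""
    return MODELS["fast"] if still_testing_prompt else MODELS["thinking"]

print(pick_model(still_testing_prompt=True))
print(pick_model(still_testing_prompt=False))
```

The returned string is what you would pass as the model argument of generate_content.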

Prompt Engineering

Prompt engineering is the process of structuring or crafting a prompt (natural language text instruction) in order to produce better outputs from a generative artificial intelligence (AI) model (source: Wikipedia).

Provide context

What is the best restaurant in Berkeley?

response = client.models.generate_content(
    model="gemini-2.5-flash",
    contents="What is the best restaurant in Berkeley?"
)
print(response.text)

Defining the "best" restaurant is always subjective, as it depends on your personal preferences, budget, occasion, and desired cuisine!

However, if we're talking about **iconic status, culinary influence, and consistently high-quality, seasonal Californian cuisine,** the answer almost always starts with:

1. **Chez Panisse:** Founded by Alice Waters, this restaurant is legendary. It practically invented the farm-to-table movement in America. Expect a refined dining experience with a multi-course, prix fixe menu that changes daily based on the freshest ingredients available. It's an investment, but for many, it's a pilgrimage and a true taste of California cuisine history.

* **The Chez Panisse Cafe** (upstairs) offers a more casual (though still excellent) and slightly less expensive a la carte experience.

Beyond Chez Panisse, Berkeley has a fantastic and diverse food scene. Here are some other highly-regarded restaurants that excel in different categories:

**For a Lively, Popular Vibe & Delicious Food:**

* **Comal:** Excellent Mexican cuisine, vibrant atmosphere, fantastic mezcal cocktails, and a great outdoor patio. Very popular, so reservations are recommended.

* **Cesar:** A lively tapas bar and restaurant with Spanish-inspired small plates, excellent cocktails, and a great wine list. Perfect for sharing and a bustling atmosphere.

**For Excellent Italian:**

* **Corso:** Rustic Italian cuisine with a focus on house-made pasta and wood-fired dishes. From the same owners as Comal, so expect quality.

**For Japanese (Izakaya/Yakitori):**

* **Kiraku:** Fantastic izakaya (Japanese gastropub) with a huge menu of small plates, grilled items, and sake.

* **Ippuku:** Specializes in yakitori (grilled skewers), with a focus on different cuts of chicken and vegetables. A more intimate and specific experience.

**For Modern American/Seasonal:**

* **Gather:** A popular spot known for its delicious, seasonal, and often vegetarian-friendly dishes, with a focus on sustainability. Great cocktails too.

**For a Unique & Beloved Local Experience:**

* **The Cheese Board Pizza Collective:** A Berkeley institution. They serve only one type of vegetarian pizza per day, sold by the slice or whole pie. Long lines, but incredibly delicious, unique, and often features live music outside. It's more of a collective/takeout experience with some standing room.

**For French (Especially Brunch):**

* **La Note:** Charming French bistro, famous for its incredible brunches, but also offers lovely dinner service.

**For Chinese (Peking Duck):**

* **Great China:** Known for its excellent Peking Duck and a wide array of traditional Chinese dishes. Can be very busy.

**To help me narrow it down to the "best" for *you*, tell me:**

* **What kind of cuisine are you in the mood for?**

* **What's your budget (e.g., casual/inexpensive, mid-range, fine dining/splurge)?**

* **What kind of atmosphere are you looking for (e.g., romantic, lively, casual, quiet)?**

* **What's the occasion?**

The LLM response looks too general; these recommendations might not be great for the average college student. One core aspect of prompt engineering is to provide more context to the prompt.

Context can help define:

- What information you are looking for in the response, e.g., “Only include cheap meals under $15, and only consider restaurants that are open past 9pm.”

- How long you want the response to be, e.g., “Limit your response to 200 words.”

- What tone you want the response to have, e.g., “Imagine you are a UC Berkeley student talking to a fellow classmate.”

- How to structure the response: markdown, comma-separated, JSON, etc., e.g., “Format your response as a bulleted list.”

Note: Include linebreaks (with newline characters '\n', or use multi-line strings) to delineate different aspects of the prompt.
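As a small illustration of the note above, the sketch below puts each aspect of a prompt on its own line by joining the pieces with newline characters. The variable names are our own; the context strings are the examples from the list above.

```python
question = "What is the best restaurant in Berkeley?"
context_parts = [
    "Only include cheap meals under $15, and only consider restaurants that are open past 9pm.",
    "Limit your response to 200 words.",
    "Format your response as a bulleted list.",
]

# Put each aspect of the prompt on its own line.
prompt = "\n".join([question] + context_parts)
print(prompt)
```

The resulting prompt is an ordinary string, so it can be passed as contents just like the single-sentence prompts earlier.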

One way to provide detailed context is to just pass in a very, very long string as your prompt. See the example below. Note that triple quotes (""") allow you to specify a multi-line string, with line breaks.

response = client.models.generate_content(
    model="gemini-2.5-flash",
    contents="""Imagine you are a UC Berkeley student talking to a fellow
classmate.
What is the best restaurant in Berkeley? Only include cheap meals
under $15, and only consider restaurants that are open past 9pm.
Format your response as a bulleted list. Limit your response to 200 words.
"""
)
print(response.text)

Alright, if we're talking best cheap eats under $15 that are open past 9 PM, especially after a late study grind, these are my go-to spots:

* **Gypsy's Trattoria Italiana:** Their pasta bowls are legendary – huge portions, super tasty, and easily under $15. Perfect for a cozy, filling meal late into the evening.

* **Top Dog:** An absolute Berkeley institution. Grab a couple of their amazing hot dogs for less than $10 total. They're quick, delicious, and open super late, often past midnight!

* **Artichoke Basille's Pizza:** Forget tiny slices; one of their massive New York-style slices is a whole meal, usually under $8. Greasy in the best way and open crazy late, especially on weekends.

* **King Dong (on Telegraph Ave):** For fast, cheap, and satisfying Chinese food, you can't beat King Dong. Huge combo plates for around $10-12, and they're reliably open late for that midnight craving.

Word count:

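# this uses regular expressions, which we don't cover in this course
import re

def split_into_words(any_chunk_of_text):
    # lowercase the text, then split wherever there is a run of non-word characters
    # (the list may include an empty string if the text starts or ends with
    # punctuation, so the count below is approximate)
    lowercase_text = any_chunk_of_text.lower()
    split_words = re.split(r"\W+", lowercase_text)
    return split_words

len(split_into_words(response.text))

155

Construct a prompt as a list of parts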

As we’ve seen in this class time and time again, we want to reuse pieces of code, data, etc. The same is true of our prompts! A formatting context from one prompt may be very useful for an entirely different application, and it would be great to reuse the context string instead of duplicating string literals in different parts of our code.

The Gemini API supports multiple argument types for contents. For this course, we focus on providing additional context to our prompts by passing in a list of strings to contents. Passing in a list of strings is comparable to separating context parts with line breaks and allows us to reuse parts of our context.

The code below specifies the same prompt as before but now with a list of strings passed into contents. (Again, because LLMs are random text generators, the response may be different from before.)

context_character = "Imagine you are a UC Berkeley student talking to a fellow classmate."
context_format = "Format your response as a bulleted list. Limit your response to 200 words."

response = client.models.generate_content(
    model="gemini-2.5-flash",
    contents=[
        context_character,
        """
What is the best restaurant in Berkeley? Only include cheap meals under $15,
and only consider restaurants that are open past 9pm.
""",
        context_format
    ]
)
print(response.text)

Yo, "best" is a tough call, especially with our student budget and late-night cravings! But if I had to pick for cheap eats *and* open past 9 pm, here are my top contenders that have saved me countless times:

* **Sliver Pizzeria:** A quintessential Berkeley experience. Their rotating veggie slices are always fresh, filling, and only like $5. They're usually open 'til 10 or 11 PM, later on weekends. Perfect study break fuel.

* **Top Dog:** An absolute institution. Grab a delicious sausage for under $5. It’s quick, cheap, and they’re open until 2 or 3 AM most nights. Ideal for a late-night bite near campus.

* **La Burrita:** For solid, satisfying Mexican, this place is clutch. A massive burrito will set you back around $10-12 and keep you full. They usually close around 10 or 11 PM, so perfect for that post-GSR hunger.

* **Cafe Durant:** When you need classic diner grub super late, Cafe Durant is your spot. You can get a full, greasy meal – think burgers, fries, or even breakfast platters – for well under $15. They’re often open until 2 AM or later!

Word count:

len(split_into_words(response.text))

191

See the prompt engineering resources below for how to construct prompts for a variety of tasks.
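To illustrate the reuse that motivates this section, the sketch below wraps two different questions in the same context strings. build_contents is a hypothetical helper, not part of the Gemini API, and the second question is made up for the example.

```python
context_character = "Imagine you are a UC Berkeley student talking to a fellow classmate."
context_format = "Format your response as a bulleted list. Limit your response to 200 words."

def build_contents(question, before=context_character, after=context_format):
    """Hypothetical helper: wrap a question in reusable context strings."""
    return [before, question, after]

restaurant_contents = build_contents("What is the best restaurant in Berkeley?")
cafe_contents = build_contents("What is the best cafe for studying in Berkeley?")

print(restaurant_contents)
print(cafe_contents)
```

Either list could then be passed as the contents argument of client.models.generate_content, in the same way as the list in the cell above.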

Additional Reading

Official Documentation:

Prompt Engineering Resources:

- Gemini API: Prompting Strategies

- Ziem et al., Table 1: LLM Prompting Guidelines to generate consistent, machine-readable outputs for CSS tasks. Very useful for project.